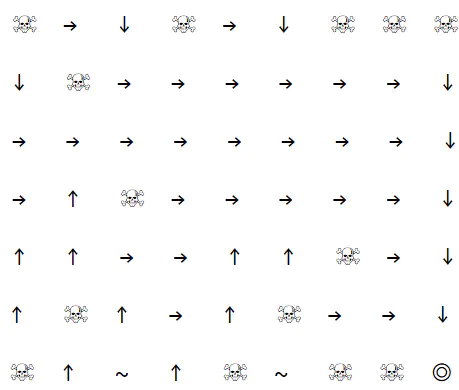

This project provides the code for a Reinforcement Learning agent to learn to navigate any N by M gridworld. The gridworld consists empty slot where an agent can go to, and holes which terminate the path of the agent. The aim is to reach the goal without falling into any holes. Any attempt to leave the gridworld border will force the agent to stay in the same spot. The state transition function for all states is deterministic and follows the agent’s intended movement (i.e. if the agent wishes to move left, it will move left with 100% probability).

The following tabular reinforcement learning algorithms are supported for this gridworld:

- Q Learning

- SARSA

- First-Visit Monte-Carlo with Exploring Starts

- Every-Visit Monte-Carlo with Exploring Starts

- First-Visit Monte-Carlo without Exploring Starts

- Every-Visit Monte-Carlo without Exploring Starts

The following features are also supported for a custom N by M gridworld:

- Generate a gridworld of specified N and M

- Choose the probability of a grid cell being a hole (randomly generated)

- Choose the starting locations of agent and goal

- Depth-First search to ensure random gridworld has a valid path from agent to goal

- Custom epsilon schedule to manage the balance between exploration & exploitation

All instructions for using the code can be found in the .IPYNB file.